AWS Serverless Model Serving

Storing the Model on S3

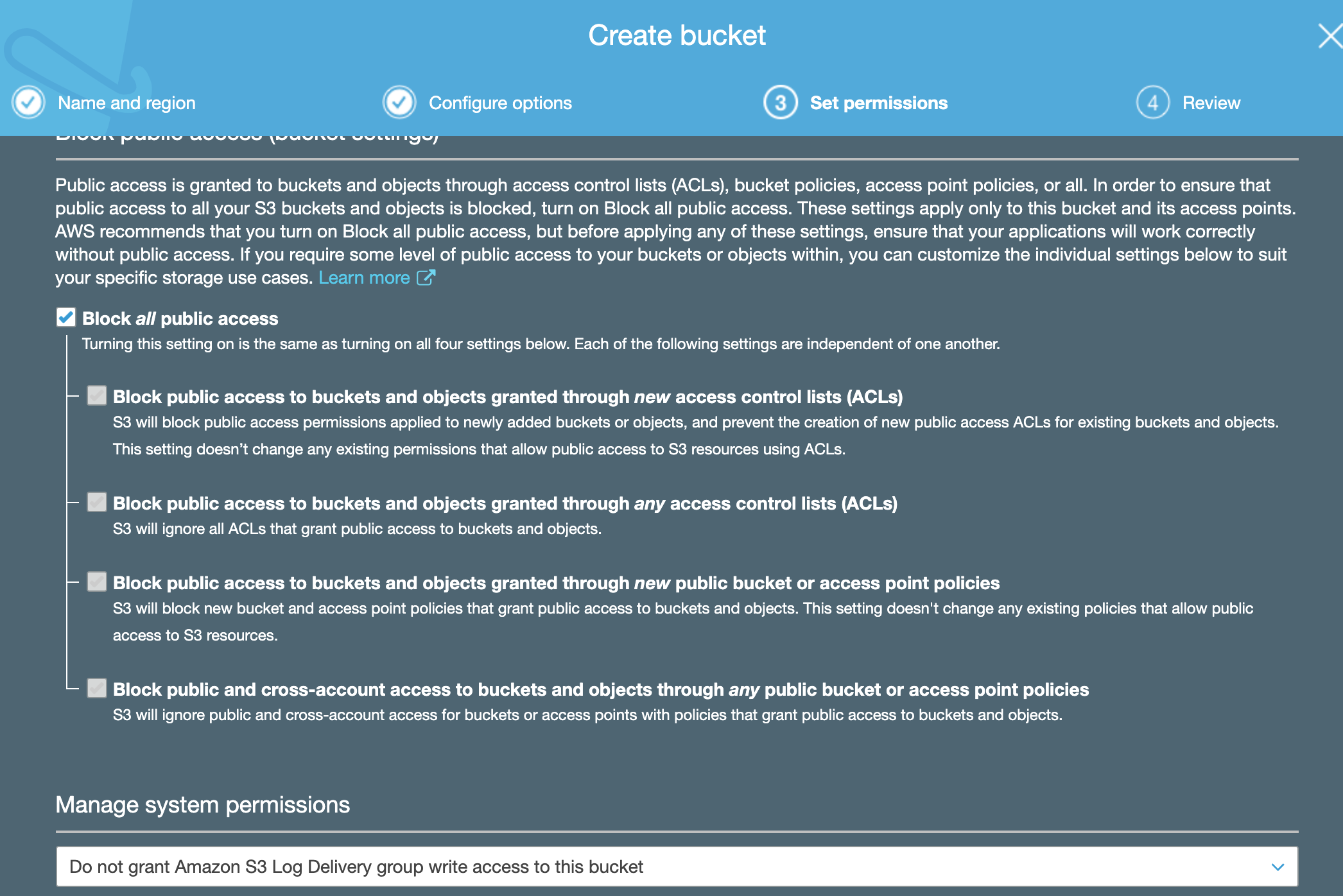

- Setting up an S3 bucket to store the model takes a few steps in the AWS console.

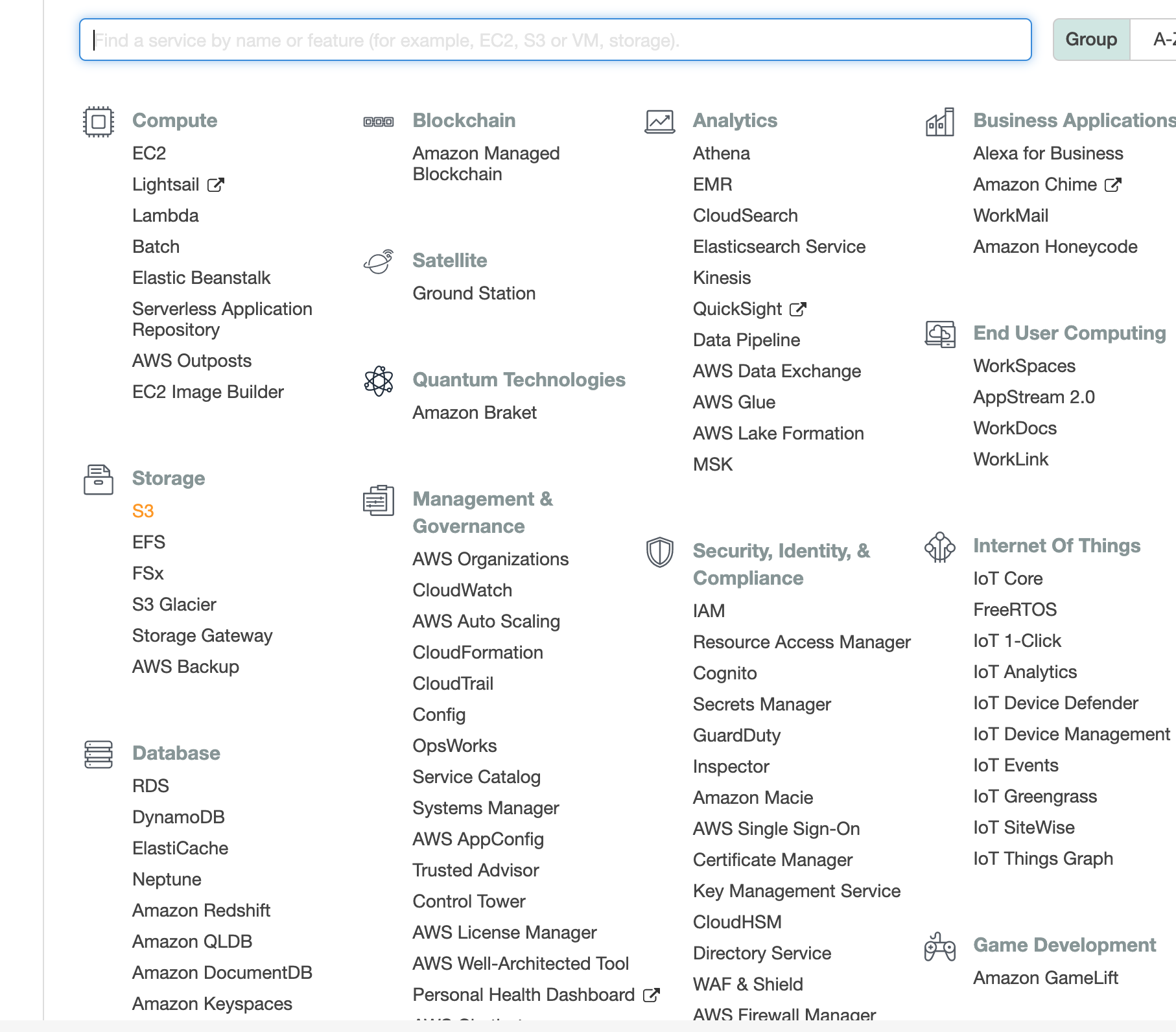

- We start on the S3 page of the AWS console.

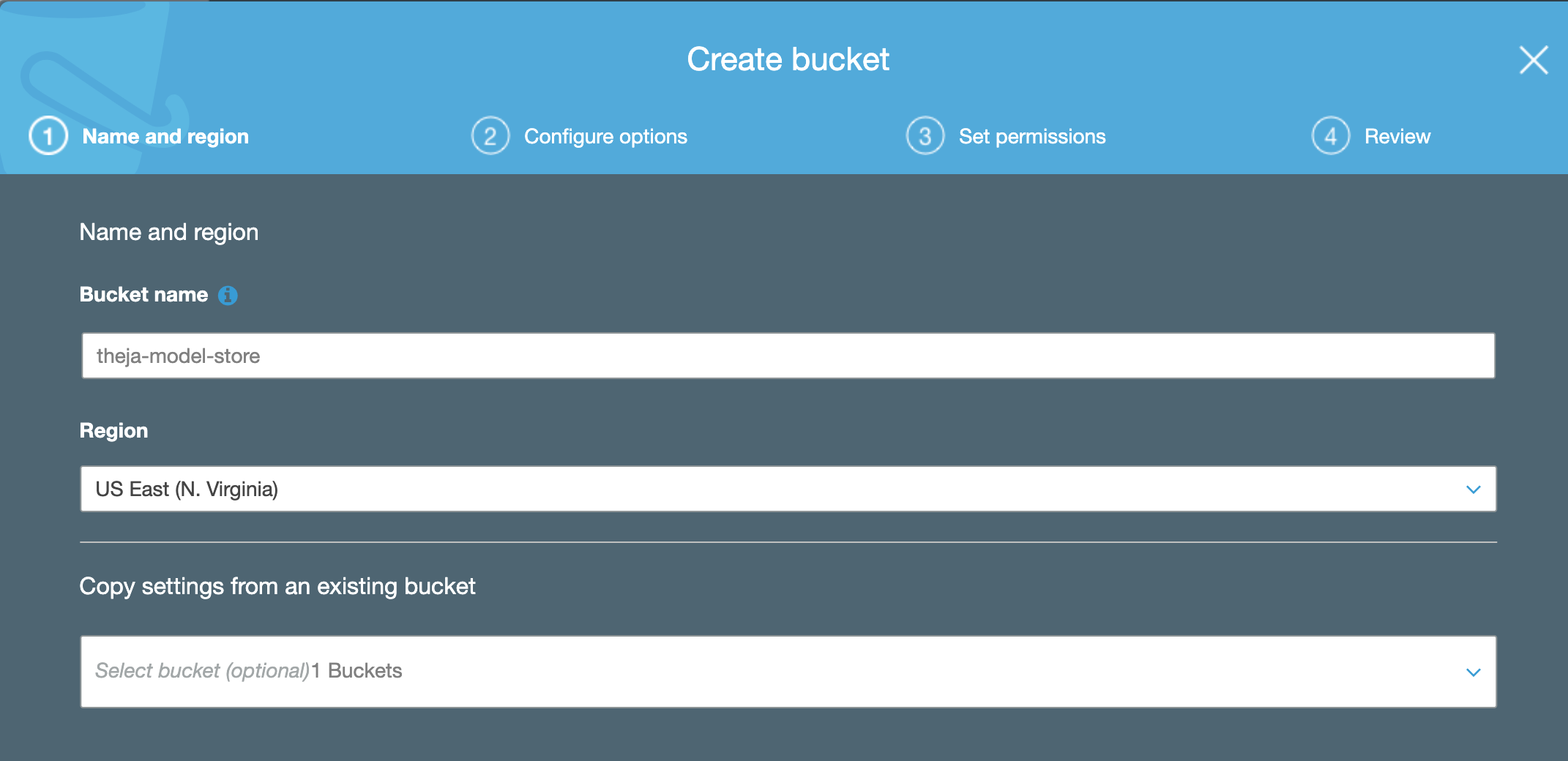

- Create a bucket with an informative name.

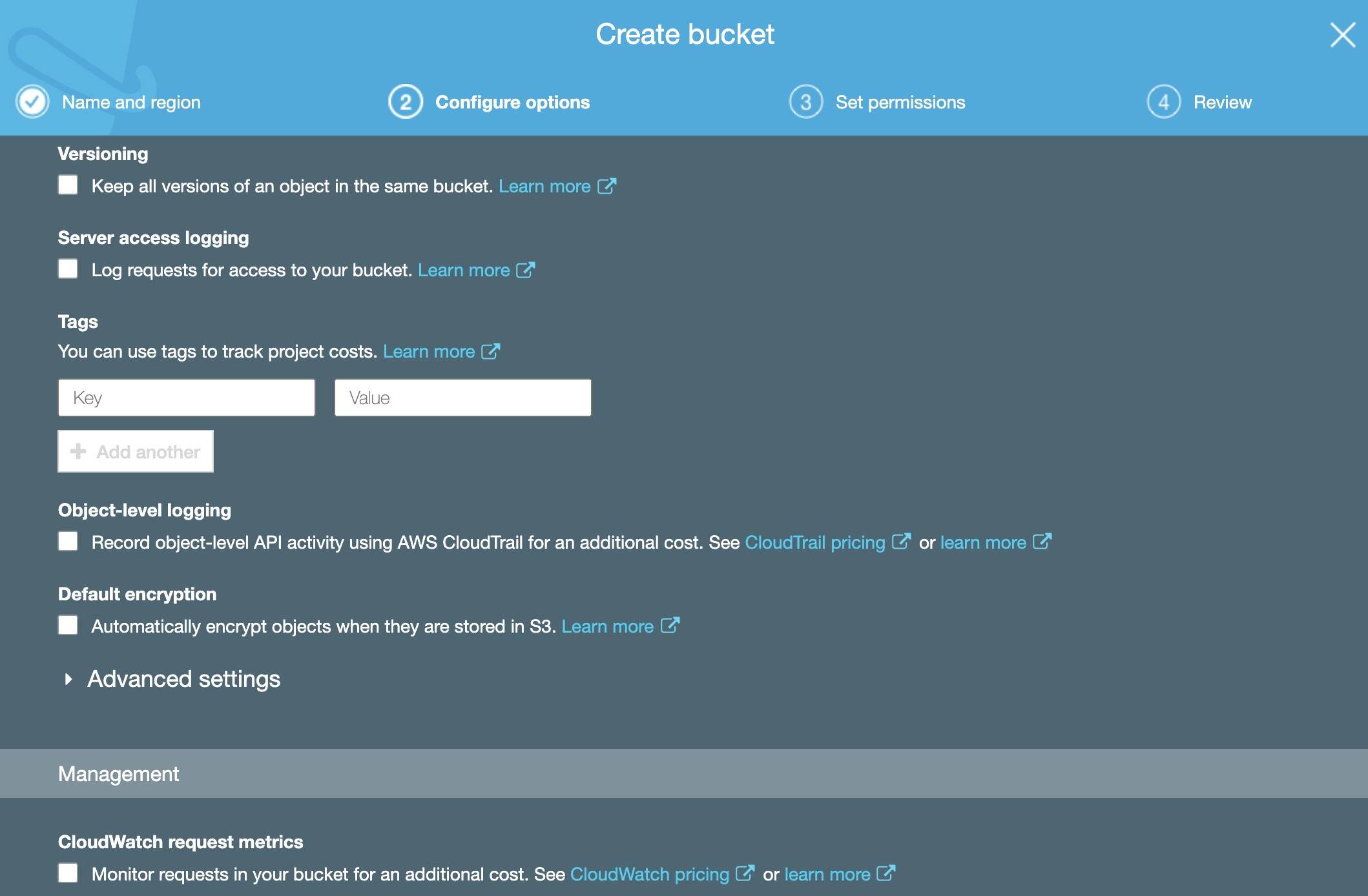

- We can leave these settings at their defaults for now.

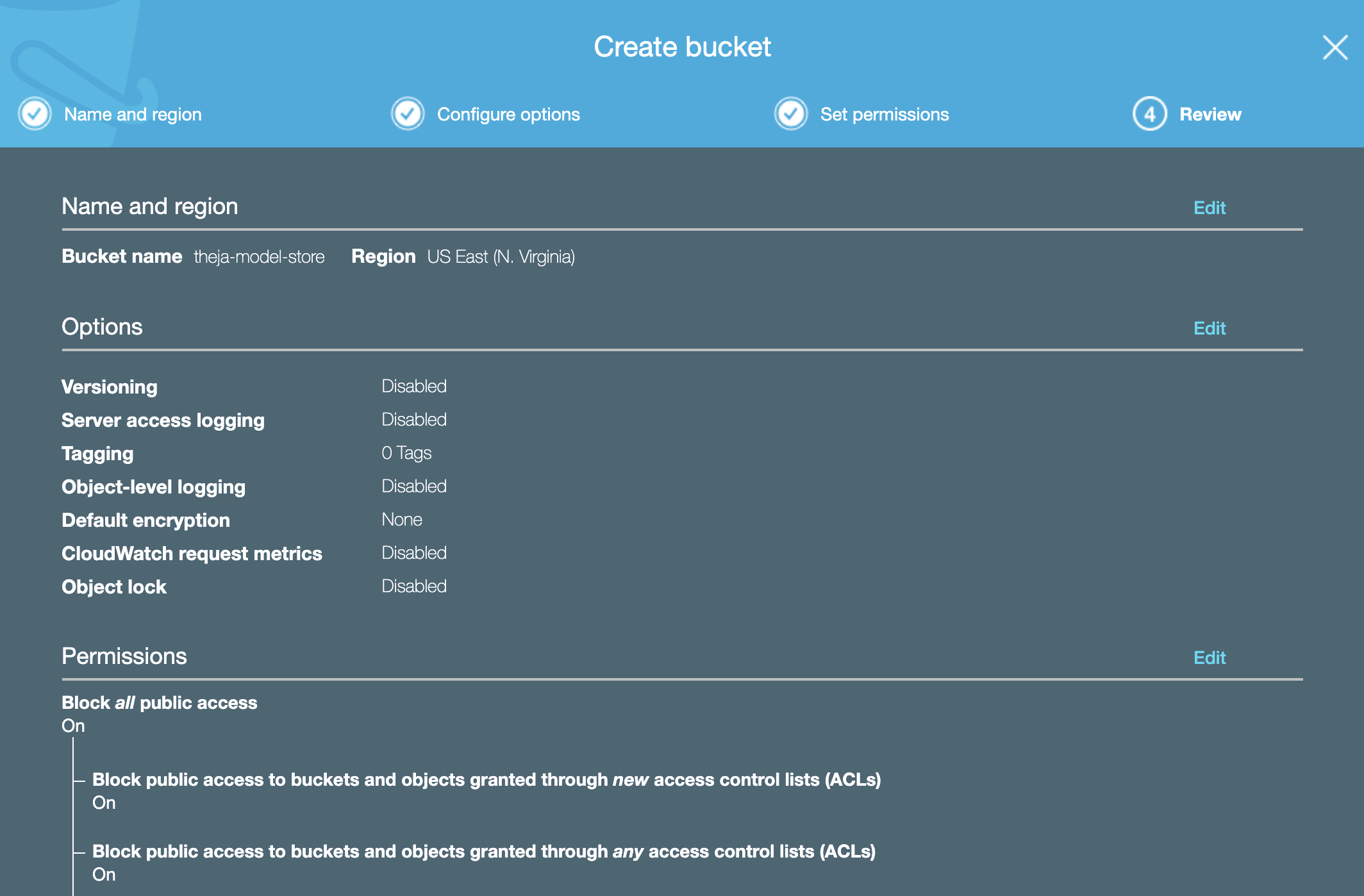

- Here is the summary to review.

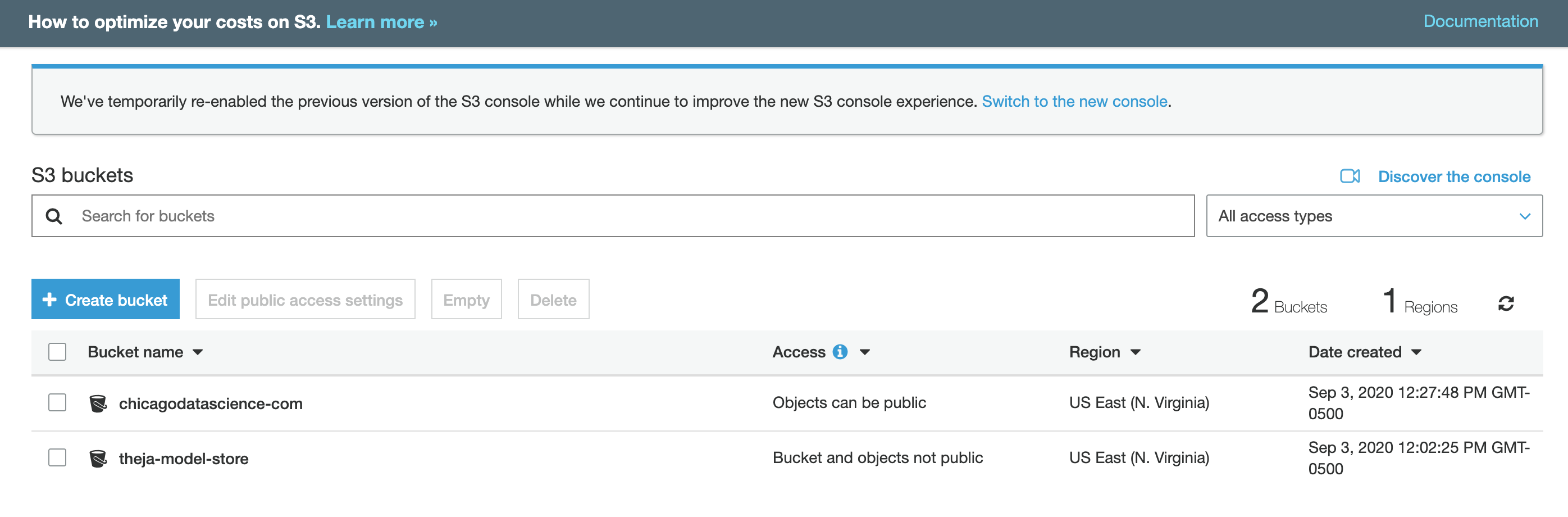

- And we can see the bucket in the list of buckets.
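
The console steps above can also be scripted, but the part most likely to trip things up is the bucket name itself. As a hedged sketch, the check below covers a subset of the documented S3 naming rules for general-purpose buckets: 3 to 63 characters; only lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no adjacent dots; and not formatted like an IP address. The function name `is_valid_bucket_name` is hypothetical, AWS enforces further rules not checked here, and global uniqueness of the name can only be confirmed by AWS itself.

```python
import ipaddress
import re

# Subset of the S3 bucket naming rules: lowercase letters, digits, dots and
# hyphens, beginning and ending with a letter or digit.
_ALLOWED = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Hypothetical helper: True if `name` passes the subset of rules checked here."""
    if not 3 <= len(name) <= 63:
        return False
    if not _ALLOWED.fullmatch(name):
        return False
    if ".." in name:  # no two adjacent periods
        return False
    try:
        ipaddress.IPv4Address(name)
        return False  # formatted like an IP address, e.g. 192.168.5.4
    except ipaddress.AddressValueError:
        return True


if __name__ == "__main__":
    for candidate in ["theja-model-store", "Model_Store", "ab", "192.168.5.4"]:
        print(candidate, is_valid_bucket_name(candidate))
```

For example, `is_valid_bucket_name("theja-model-store")` returns `True`, while `"Model_Store"` is rejected because of the uppercase letters and the underscore.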

Zip of Local Environment

- We need a zip of the local environment that includes all dependent libraries.

- This is because, unlike Cloud Functions, Lambda gives us no way to specify a `requirements.txt`.

- This zip file can be uploaded to the bucket we created above.
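
As a rough sketch of what goes into that zip, the hypothetical helper `zip_directory` below does from Python roughly what running `zip -r recommend.zip .` inside the dependency directory does: every file is stored under a path relative to that directory, so `lambda_function.py` and the installed libraries sit at the top level of the archive, which is where Lambda looks for the handler module. It uses only the standard-library `os` and `zipfile` modules and is not part of the original workflow.

```python
import os
import zipfile
from typing import List


def zip_directory(src_dir: str, zip_path: str) -> List[str]:
    """Zip every file under src_dir, storing paths relative to src_dir.

    Hypothetical helper, roughly `cd src_dir && zip -r zip_path .`.
    Returns the member names of the finished archive.
    """
    src_dir = os.path.abspath(src_dir)
    zip_abs = os.path.abspath(zip_path)
    with zipfile.ZipFile(zip_abs, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(src_dir):
            for fname in files:
                full = os.path.join(root, fname)
                if full == zip_abs:
                    continue  # skip the archive itself if it lives inside src_dir
                # A relative arcname keeps lambda_function.py at the archive root.
                zf.write(full, arcname=os.path.relpath(full, src_dir))
    with zipfile.ZipFile(zip_abs) as zf:
        return zf.namelist()
```

Run from the parent directory, `zip_directory("lambda_model", "recommend.zip")` would produce an archive laid out like the one the shell command creates.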

We start by creating a directory to hold all the dependencies.

```bash
mkdir lambda_model
cd lambda_model
# pip install pickle5 -t .  # if we had a specific requirement, we would execute this line
```

First we read the model and the `movies.dat` file (presumably in the parent directory) to create two new dictionaries, which we dump as JSON files for the Lambda function to read:

```python
# Dump the recommendations and movie info as dictionaries.
import json

import pandas as pd
import pickle5
from surprise import Dataset  # not used directly; surprise must be installed to unpickle the model


def load(file_name):
    with open(file_name, 'rb') as f:
        dump_obj = pickle5.load(f)
    return dump_obj['predictions'], dump_obj['algo']


def get_top_n(predictions, n=10):
    # First map the predictions to each user.
    top_n = {}
    for uid, iid, true_r, est, _ in predictions:
        if uid not in top_n:
            top_n[uid] = []
        top_n[uid].append((iid, est))

    # Then sort the predictions for each user and retrieve the n highest ones.
    for uid, user_ratings in top_n.items():
        user_ratings.sort(key=lambda x: x[1], reverse=True)
        top_n[uid] = user_ratings[:n]

    return top_n


df = pd.read_csv('../movies.dat', sep="::", header=None, engine='python')
df.columns = ['iid', 'name', 'genre']
df.set_index('iid', inplace=True)
predictions, algo = load('../surprise_model')
top_n = get_top_n(predictions, n=5)
df = df.drop(['genre'], axis=1)
movie_dict = df.T.to_dict()

# lambda_function.py reads JSON, so write JSON (not pickle) files here.
with open('movie_dict.json', 'w') as f:
    json.dump(movie_dict, f)
with open('top_n.json', 'w') as f:
    json.dump(top_n, f)
```

Next we create the following file, called `lambda_function.py`, in this directory:

```python
import json

# top_n = {'196':[(1,3),(2,4)]}
# movie_dict = {1:{'name':'a'},2:{'name':'b'}}


def lambda_handler(event, context):
    data = {"success": False}

    with open("top_n.json", "r") as read_file:
        top_n = json.load(read_file)
    with open("movie_dict.json", "r") as read_file:
        movie_dict = json.load(read_file)

    print(event)  # debug

    # Requests through API Gateway carry the JSON payload as a string in "body".
    if "body" in event:
        event = event["body"]
        if event is not None:
            event = json.loads(event)
        else:
            event = {}

    if "uid" in event:
        # Unknown users get an empty list instead of raising a KeyError.
        data["response"] = str([movie_dict.get(iid, {'name': None})['name']
                                for (iid, _) in top_n.get(event.get("uid"), [])])
        data["success"] = True

    return {
        'statusCode': 200,
        'headers': {'Content-Type': 'application/json'},
        'body': json.dumps(data)
    }
```

Here, instead of the request object used by Cloud Functions on GCP, the handler takes a pair `(event, context)` as input. The `event` object holds the query values. See this for more details.

Next we zip the model files and their dependencies and upload the archive to S3:

```bash
zip -r recommend.zip .
aws s3 cp recommend.zip s3://theja-model-store/recommend.zip
aws s3 ls s3://theja-model-store/
```

Add the API Gateway as before and see the predictions in action!
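
Before wiring up API Gateway, it can help to exercise the handler's parsing and lookup logic locally. The sketch below re-creates that logic as a hypothetical `recommend` function that works on in-memory dictionaries instead of the JSON files, and feeds it an event shaped like an API Gateway proxy request, where `body` arrives as a JSON string. The toy ids and names come from the commented-out lines in `lambda_function.py`. Round-tripping them through `json` shows that integer movie ids become string keys, so the ids stored in `top_n` must also be strings for the `movie_dict.get(...)` lookup to find them (this assumes, as is the case when Surprise loads ratings from a file, that the raw ids in the predictions are strings).

```python
import json

# Toy data from the commented-out lines in lambda_function.py, round-tripped
# through JSON the way movie_dict.json and top_n.json would be.
movie_dict = json.loads(json.dumps({1: {"name": "a"}, 2: {"name": "b"}}))
top_n_int_ids = json.loads(json.dumps({"196": [(1, 3), (2, 4)]}))
top_n_str_ids = json.loads(json.dumps({"196": [("1", 3), ("2", 4)]}))


def recommend(event, top_n, movie_dict):
    """Hypothetical local stand-in for the body of lambda_handler."""
    data = {"success": False}
    if "body" in event:  # API Gateway proxy events carry the payload as a string
        body = event["body"]
        event = json.loads(body) if body is not None else {}
    if "uid" in event:
        data["response"] = str(
            [movie_dict.get(iid, {"name": None})["name"]
             for (iid, _) in top_n.get(event["uid"], [])]
        )
        data["success"] = True
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(data),
    }


if __name__ == "__main__":
    event = {"body": json.dumps({"uid": "196"})}
    print(recommend(event, top_n_str_ids, movie_dict)["body"])
    print(recommend(event, top_n_int_ids, movie_dict)["body"])
```

Run as a script, the first call returns a body whose `response` is `"['a', 'b']"`, while the second gives `"[None, None]"`, because the integer ids in `top_n_int_ids` no longer match the string keys that `movie_dict` has after the JSON round trip.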