Spark Clusters

- The execution environment is typically distributed across several machines (i.e., a cluster).

- We submit jobs to clusters for execution.

- Spark itself is written in Scala and runs on the JVM, but its Python interface (PySpark) makes it accessible to data science professionals.

Types of Deployments

There are three types:

- Self-hosted cluster deployments

- Direct cloud solutions (e.g., Cloud Dataproc by Google Cloud and EMR by AWS)

- Vendor-based deployments (e.g., Databricks):

  - Here the vendors sit on top of IaaS providers such as GCP, AWS and Azure.

  - They add value by making Spark cluster management easy.

  - They make it easy to access multiple data science tools.

  - They also integrate with workflow and ML lifecycle tools such as Airflow and MLflow.

Which one should we choose?

- It depends on a cost-benefit analysis.

- Cloud and vendor solutions will eventually lead to vendor lock-in.

- Self-hosting, however, requires full-time engineers to keep the cluster running around the clock.

Cluster

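- A cluster is a collection of machines.

- One machine runs the driver, which runs your program and coordinates the work (loosely, the master).

- The other machines are called workers.

- The driver splits a job into tasks, which are distributed across the worker nodes.

- Worker nodes should be treated as unreliable: any of them can fail or disappear in the middle of a job.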

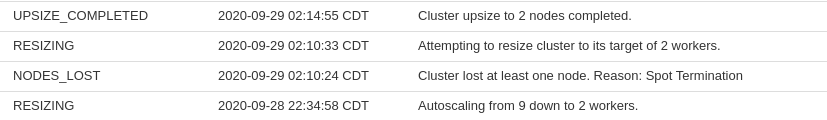

- For instance, these could be spot instances from AWS.

- Because of the transient nature of nodes and tasks mapped to these nodes, the data input/output needs to be handled differently from single machine codebases we have seen so far.

- Unlike sshing into a container to assess bugs after it executed a faulty program, we cannot do the same here (if the node failed, we cannot ssh into it). So monitoring nodes for failures is a value add that some vendors provide.

- Below is an example of a cluster losing its worker node.
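The driver/worker split and the handling of a lost worker can be sketched with a toy simulation in plain Python. This is not Spark's scheduler or API: `run_job`, the round-robin assignment and the `fail_on` parameter are all hypothetical, chosen only to show the idea that when a worker disappears, the driver re-queues that worker's task on a surviving worker (conceptually similar to how Spark reschedules tasks from a lost worker).

```python
def run_job(partitions, workers, fail_on=None):
    """Toy driver loop (NOT Spark's scheduler).

    partitions: list of lists of ints, one list per task
    workers:    names of the simulated worker machines
    fail_on:    hypothetical (worker, task_id) pair at which that worker dies,
                e.g. because its spot instance was reclaimed
    Returns (sum of squares, [(task_id, worker), ...] log, surviving workers).
    """
    alive = list(workers)
    pending = list(range(len(partitions)))  # task ids still to be computed
    results = {}                            # task id -> partial result
    log = []                                # which worker finished which task
    while pending:
        if not alive:
            raise RuntimeError("all workers lost; job fails")
        retry = []
        for i, task_id in enumerate(pending):
            worker = alive[i % len(alive)]  # round-robin over surviving workers
            if fail_on == (worker, task_id):
                alive.remove(worker)        # node is gone, and so is its work
                retry.append(task_id)       # driver re-queues the lost task
                fail_on = None              # simulate a single failure
                continue
            results[task_id] = sum(x * x for x in partitions[task_id])
            log.append((task_id, worker))
        pending = retry
    return sum(results.values()), log, alive


partitions = [[1, 2], [3, 4], [5, 6]]
healthy = run_job(partitions, ["w1", "w2"])
degraded = run_job(partitions, ["w1", "w2"], fail_on=("w2", 1))
```

Both runs return 91 (the sum of squares of 1 to 6); in the second, task 1 is simply finished by `w1` after `w2` is lost. The retry only works because the task's input is still reachable after the failure, which is the toy analogue of keeping inputs and outputs on shared storage rather than on a worker's own disk.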

PySpark vs Python

- PySpark DataFrames are built on top of Spark's underlying RDDs (resilient distributed datasets), which lets them manipulate datasets too large for a single machine.

- Transformations on a DataFrame are executed lazily (unlike in pandas). For instance, a select or filter on columns and rows is not run as a Spark job until its result is actually needed, e.g., to display it, download it, or hand it to pandas for further processing.

- When you work in pure Python (e.g., with lists or pandas DataFrames), that code runs on the driver node alone, as if it were a single machine. This is sub-optimal for large datasets: the workers sit idle, and the data must fit in the driver's memory.
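Lazy execution can be illustrated with a toy class in plain Python. `LazyFrame` and its `jobs_run` counter are hypothetical and are NOT the PySpark API; they only mimic the split between transformations (in PySpark, e.g., `select` and `filter`), which merely record a step, and actions (e.g., `show`, `collect`, `count`, `toPandas`), which trigger the actual work.

```python
class LazyFrame:
    """Toy stand-in for a Spark DataFrame (NOT the PySpark API).

    Transformations (filter, select) only append a step to a plan;
    nothing runs until an action (collect) is called.
    """

    jobs_run = 0  # how many times any plan has actually been executed

    def __init__(self, rows, plan=()):
        self._rows = rows  # source data: a list of dicts
        self._plan = plan  # tuple of pending ("filter" | "select", argument) steps

    def filter(self, predicate):
        # Transformation: record the step, return a new frame, do no work.
        return LazyFrame(self._rows, self._plan + (("filter", predicate),))

    def select(self, *cols):
        # Transformation: record the step, return a new frame, do no work.
        return LazyFrame(self._rows, self._plan + (("select", cols),))

    def collect(self):
        # Action: only now is the recorded plan applied to the data.
        LazyFrame.jobs_run += 1
        out = []
        for row in self._rows:
            keep = True
            for kind, arg in self._plan:
                if kind == "filter" and not arg(row):
                    keep = False
                    break
                if kind == "select":
                    row = {col: row[col] for col in arg}
            if keep:
                out.append(row)
        return out


rows = [
    {"id": 1, "age": 25, "city": "A"},
    {"id": 2, "age": 40, "city": "B"},
    {"id": 3, "age": 35, "city": "A"},
]
adults = LazyFrame(rows).filter(lambda r: r["age"] > 30).select("id", "city")
runs_before_action = LazyFrame.jobs_run  # 0: the filter/select have not run yet
result = adults.collect()                # the "job" executes here
```

Deferring work this way is what lets Spark look at the whole plan before running it and optimize it as a unit; the toy class makes no such attempt and just replays the steps in order.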

The most challenging aspect of working with PySpark is figuring out which steps in your task (say, a feature engineering task) are not being distributed, and then rewriting them so that they are.
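The difference between a driver-bound step and a distributed one can be sketched without Spark. In this toy model (an assumption, not Spark code), `partitions` stands for data spread over four workers and threads stand in for worker machines. The driver-bound version pulls every row onto one machine first; the distributed version has each "worker" reduce its partition to a small `(sum, count)` pair, so only those pairs travel to the driver. In PySpark this is roughly the difference between `df.toPandas()["x"].mean()` and `df.agg(F.mean("x"))` for a hypothetical column `x`.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data: 4 partitions, as if spread over 4 workers.
partitions = [list(range(i * 1000, (i + 1) * 1000)) for i in range(4)]


def mean_on_driver(parts):
    """Driver-bound: gather every row on one machine first (like toPandas())."""
    rows = [x for p in parts for x in p]  # all 4000 rows now live on the driver
    return sum(rows) / len(rows), len(rows)


def partial_stats(part):
    """Runs 'on a worker': reduce one partition to a tiny (sum, count) pair."""
    return sum(part), len(part)


def mean_distributed(parts):
    """Distributed: only one (sum, count) pair per partition reaches the driver."""
    with ThreadPoolExecutor(max_workers=len(parts)) as pool:  # threads stand in for workers
        partials = list(pool.map(partial_stats, parts))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count, len(partials)


driver_mean, rows_moved = mean_on_driver(partitions)
dist_mean, items_moved = mean_distributed(partitions)
```

Both give the same mean, 1999.5, but the driver-bound version moves 4000 rows to the driver while the distributed one moves 4 small pairs. Spotting steps shaped like the first function, and rewriting them into the second shape, is the kind of work the paragraph above describes.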

Tip: It is a good idea to develop on a subsample of the data until every step is properly optimized (e.g., most operations stay lazy and run on the cluster rather than on the driver).
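The subsample-first workflow can be sketched as a toy in plain Python. In PySpark the subsample would typically come from `df.sample(fraction=0.01, seed=42)`, whose result size is only approximately `fraction` times the row count. The code below is NOT Spark's implementation: `sample_partitions`, the per-partition seeding, and the `add_features` step are hypothetical. It keeps each row independently with probability `fraction`, so a fixed seed gives a reproducible development sample, and the same feature step is later rerun unchanged on the full data.

```python
import random


def sample_partitions(partitions, fraction, seed):
    """Toy Bernoulli sample (NOT Spark's implementation): keep each row
    independently with probability `fraction`. Each partition gets its own
    seeded RNG so that the same seed always yields the same subsample."""
    sampled = []
    for pid, part in enumerate(partitions):
        rng = random.Random(seed + pid)  # per-partition seed: an assumption of this sketch
        sampled.append([row for row in part if rng.random() < fraction])
    return sampled


def add_features(part):
    """Hypothetical feature step, applied partition by partition."""
    return [(x, x % 7, x * x) for x in part]


full = [list(range(i * 10_000, (i + 1) * 10_000)) for i in range(4)]  # 40,000 rows
dev = sample_partitions(full, fraction=0.01, seed=42)  # about 1% for fast iteration
dev_features = [add_features(p) for p in dev]          # debug the pipeline here first
# ...then run the unchanged step on the full data once it works:
full_features = [add_features(p) for p in full]
```

Fixing the seed matters during development: every rerun sees the same rows, so a change in output comes from a change in the code rather than from a different sample.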